Microservices is a technique of developing cloud software systems. It accelerates application development, making way for innovation while facilitating the installation of advanced features. Microservices offer great value to companies by easing code development. Some of the most successful brands using microservices include Uber, Netflix, and Twitter. Read on to learn more about Cloud Microservices.

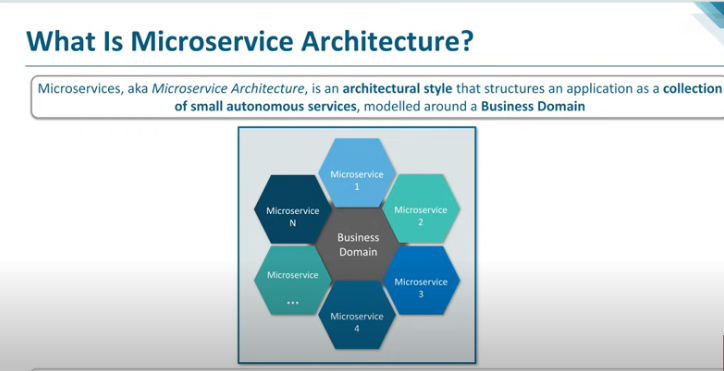

What Are Microservices?

Microservices, also known as microservices architecture, is a cloud-native design concept in which one application comprises many independently deployable, loosely coupled smaller services or components. Typically, these services:

- Have their own technology stack, including their own database and data management model

- Communicate with one another over a blend of REST APIs, event streaming, and message brokers

Functionality or new features can be easily incorporated into microservices without interrupting the entire application.

What does the Microservices Architecture Comprise of?

A microservices architecture is a complex combination of code, databases, programming logic, and application functions spread across servers and platforms. A few essential elements tie these pieces together into a working distributed system. Read on to understand the core elements of a microservices architecture that application architects and developers should know to succeed with distributed services.

· Microservices

Microservices form the base of a microservices architecture. It is the concept of dividing the application into small independent services written in any programming language, which communicate across lightweight protocols. Independent microservices allow software teams to apply iterative development procedures to develop and upgrade features with ease.

Teams should choose the right size for their microservices and remember that splitting an application into too many small services increases management effort and overhead. Developers should decouple services thoroughly to reduce dependencies and encourage service autonomy.

· Service Mesh

The service mesh in a microservices architecture provides a robust messaging layer to ease communication between services. Service mesh tooling typically uses the sidecar pattern, placing a proxy container beside each container that runs a single microservice or a group of services. The sidecar routes traffic to and from its container and manages communication with the other sidecar proxies, which helps keep service connections healthy.

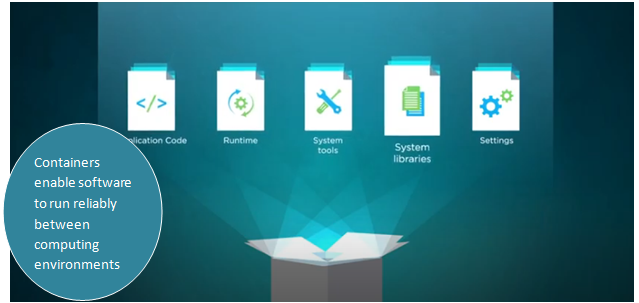

· Containers

Containers are software units that package services together with their dependencies to keep a consistent unit across development, testing, and production. In a microservices architecture, containers can improve deployment speed and efficiency more effectively than other deployment approaches.

Containers can deploy on-demand without affecting the performance of applications negatively. Further, developers can move, replace, and replicate containers with ease. The container’s consistency and independence are crucial for scaling particular segments of a microservices architecture based on workloads instead of the entire application.

One of the core providers in container management is Docker, which launched as an open-source container management platform. Its success led a significant tooling ecosystem to grow around it, including prominent container orchestrators such as Kubernetes.

· Service Discovery

The number of active microservice instances in a deployment varies because of updates, workloads, or failure mitigation. Tracking how many instances are running, and where, across a distributed application architecture can be difficult.

Service discovery lets service instances adapt to a changing deployment and distributes load across the microservices appropriately. The service discovery element comprises three parts:

- Service registry

- Service provider

- Service consumer

It also has two core discovery patterns:

- Client-side discovery pattern

- Server-side discovery pattern

In the client-side discovery pattern, the service consumer queries the service registry for a service provider and uses a load-balancing algorithm to pick an available service instance before making a request. In the server-side discovery pattern, the consumer sends the request to a router or load balancer, which queries the registry and forwards the request on its behalf.

Data in the service registry should be kept current so that related services can locate one another's instances at runtime. If the service registry goes down, services can no longer find each other. For this reason, enterprises often back the registry with a distributed coordination store such as Apache ZooKeeper to avoid a single point of failure.
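To make the registry, provider, and consumer roles concrete, here is a minimal in-memory sketch of client-side discovery with round-robin load balancing. The class names (`ServiceRegistry`, `RoundRobinClient`) and the addresses are hypothetical and only illustrate the pattern; a production registry would be a replicated service rather than a Python dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceRegistry:
    """Hypothetical in-memory registry: service name -> list of live instance addresses."""
    instances: dict = field(default_factory=dict)

    def register(self, name: str, address: str) -> None:
        # A service provider calls this when an instance starts.
        self.instances.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        # Called when an instance stops or fails a health check.
        if address in self.instances.get(name, []):
            self.instances[name].remove(address)

    def lookup(self, name: str) -> list:
        return list(self.instances.get(name, []))


class RoundRobinClient:
    """Service consumer that does client-side discovery and load balancing."""

    def __init__(self, registry: ServiceRegistry):
        self.registry = registry
        self._counters = {}

    def choose(self, name: str) -> str:
        # Query the registry on every call so the choice reflects current instances.
        instances = self.registry.lookup(name)
        if not instances:
            raise LookupError(f"no live instance of {name!r}")
        n = self._counters.get(name, 0)
        self._counters[name] = n + 1
        return instances[n % len(instances)]
```

A consumer would call `choose("orders")` before each request and then contact the returned address. Because the registry is consulted every time, a deregistered instance stops receiving traffic on the next call.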

· API Gateway

API gateways create the core layer of abstraction between microservices and external clients to facilitate communication within a distributed architecture. They take on much of the administrative and communication work that a monolithic application would handle internally, so the microservices themselves stay lightweight. They can also cache responses, authenticate and control requests, and monitor and load-balance traffic where necessary. Numerous API gateway options are available from open-source providers and from cloud platform suppliers like Microsoft and Amazon.
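The gateway responsibilities listed above (authentication, routing, and caching) can be sketched in a few lines. This is an illustrative toy, not any vendor's gateway API: `ApiGateway`, the token check, and the unbounded cache are all assumptions made for the example.

```python
from typing import Callable, Dict, Tuple

Handler = Callable[[str], str]


class ApiGateway:
    """Toy gateway: authenticates a request, serves from cache, or routes by path prefix."""

    def __init__(self, valid_tokens):
        self.valid_tokens = set(valid_tokens)
        self.routes: Dict[str, Handler] = {}
        self.cache: Dict[str, str] = {}  # unbounded and never expired; fine for a sketch only

    def add_route(self, prefix: str, handler: Handler) -> None:
        self.routes[prefix] = handler

    def handle(self, path: str, token: str) -> Tuple[int, str]:
        # 1. Authenticate before touching any backend service.
        if token not in self.valid_tokens:
            return 401, "unauthorized"
        # 2. Serve repeated requests from the cache.
        if path in self.cache:
            return 200, self.cache[path]
        # 3. Route to the backend with the longest matching prefix.
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path.startswith(prefix):
                body = self.routes[prefix](path)
                self.cache[path] = body
                return 200, body
        return 404, "no route"
```

Each backend handler here stands in for a call to a microservice. The point of the design is that the services behind the gateway never see unauthenticated traffic and are not hit twice for the same cached path.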

What is the Difference Between Microservices and Monolithic Architecture?

Often, microservices are mistaken for monolithic architecture and SOA (service-oriented architecture). Read on to understand the difference between microservices and monolithic architecture.

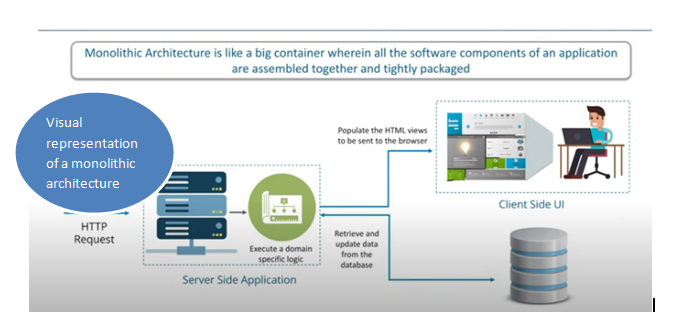

A monolithic architecture is developed as a single system in which the front-end and back-end code are deployed together. A monolithic architecture comprises three parts:

- Client-side user interface

- Database

- Server-side application

A client-side user interface comprises HTML pages and JavaScript running in a browser. The server-side application handles HTTP requests, executes domain logic, retrieves and updates data in the database, and generates the HTML views sent to the browser.

On the other hand, microservices architecture is an approach in which a single application is built as a collection of small services. These services run in their own processes and communicate using lightweight mechanisms, usually an HTTP resource API. They are built around business capabilities and can be deployed independently using fully automated deployment machinery. Other differences include:

On-demand scaling in a monolithic architecture can be difficult but easy on microservices architecture.

A large code base in a monolithic architecture slows down the Integrated Development Environment (IDE) and prolongs build time. Projects in a microservices architecture, however, are small and independent, which reduces build and development time.

A monolithic architecture features a shared database, while each service in a microservices architecture typically has its own database.

Changing a framework or technology in a microservices architecture is easy. Making the same change in a monolithic architecture can be tricky because the components are tightly coupled and depend on one another.

Monolithic Applications

Initially, every application based on a single server featured three layers:

- Database

- Business logic or application

- Presentation

These layers were built as one intertwined stack running on a single monolithic server in a data center. This design was standard across industry verticals and technology architectures. An application is a collection of code modules that each serve a specific function, such as graphics rendering, different types of business logic, a database, or logging.

In a monolithic architecture, users interact with the presentation layer, which communicates with the business logic and the database before the information travels back up the stack to the end user. While this was a workable way of organizing an application, it created numerous single points of failure, which led to prolonged downtime in case of a code bug or hardware failure.

Self-healing was missing in monolithic applications, meaning that if a section of the system had issues, it required human intervention to fix. Scaling any of these layers meant buying a whole new server, running another copy of the monolithic application on it, and moving some of the users over to the new system.

Splitting users this way created silos of user data that had to be reconciled daily. As web pages and mobile applications became popular, clients became thinner, and new methods of application development started to take hold.

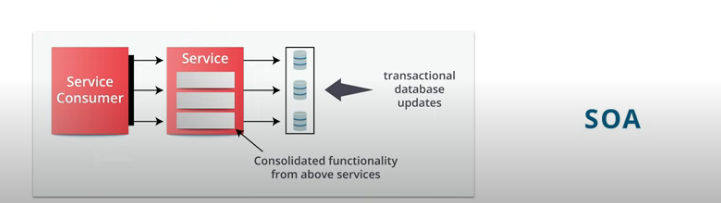

Service-Oriented Architecture (SOA)

Architecture started changing by the mid-2000s. The layers moved off the single server and became self-supporting service silos. Applications were built to integrate these services, using an enterprise service bus to facilitate communication.

This approach allowed administrators to scale these services independently by aggregating servers behind proxies. It also shortened development cycles by letting engineers work on only one section of the application service structure.

Decoupling services and promoting independent development called for APIs. An API is a set of syntax rules that services use to communicate with one another. Further, SOA coincided with the rise of the virtual machine (VM), which made the use of physical server resources more efficient.

Services could be deployed faster on smaller virtual machines than earlier monolithic applications could on bare-metal servers. This mixture of technologies led to better high availability (HA) solutions built into both the services architecture and the related infrastructure technologies.

Cloud Microservices

Today, cloud microservices break the service-oriented architecture strategy down further into granular functional services. Groups of microservices combine to form larger services. This makes it quicker to update the code of a single function within a broader service or end-user application.

A microservice focuses on one concern, like a web service, logging, or data search. This concept enhances flexibility. For instance, you can update the code of one function without refactoring or redeploying the rest of the microservices architecture. The failure points are also more independent of one another, which makes the application architecture more stable.

This concept also allows microservices to acquire self-healing characteristics. For instance, assume a microservice in a cluster comprises three sub-functions. If one of the sub-functions crashes, it is repaired automatically without human intervention. Orchestration tools like Kubernetes provide this self-healing by restarting or replacing failed components.
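The self-healing idea boils down to a reconciliation loop: compare the desired state with the observed state and replace whatever is missing or unhealthy. The sketch below illustrates that loop with hypothetical names (`Replica`, `reconcile`); it is not Kubernetes code, and a real orchestrator would start actual containers rather than create Python objects.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    """Hypothetical record of one running sub-function."""
    name: str
    healthy: bool = True


def reconcile(replicas, desired_names):
    """Return (new_state, restarted): every desired replica present and healthy again."""
    healthy = {r.name: r for r in replicas if r.healthy}
    # Anything desired but not currently healthy must be restarted.
    restarted = [name for name in desired_names if name not in healthy]
    # Keep healthy replicas as they are; create fresh ones for the rest.
    new_state = [healthy.get(name) or Replica(name) for name in desired_names]
    return new_state, restarted
```

With three sub-functions where the second has crashed, `reconcile` returns a state in which all three are healthy and reports that only the second one was restarted. An orchestrator runs this kind of comparison continuously.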

· Microservices Work with Docker Containers

Microservices architectures work closely with Docker containers, a packaging and deployment format. While virtual machines have been used as a deployment technique for many years, containers are more efficient. They package code so that it can be deployed on Linux systems.

Containers are an ideal deployment vehicle for microservices. They start very quickly, so they can be redeployed rapidly after a migration or failure and can scale fast to meet demand. Microservices have become closely associated with cloud-native architectures, and the two share many characteristics. Because microservices run in containers, they are decoupled from the underlying host and can run on any compatible operating system.

That operating system can run anywhere: on-premises or in a public cloud, on a hypervisor or on bare metal. As the cloud has matured, cloud-native practices and architectures have also made their way back into on-premises data centers.

Numerous organizations are building local environments that share the essential characteristics of the cloud, which allows a single development practice across locations, or cloud-native anywhere. The cloud-native concept is realized by adopting container technologies and microservices architectures.

How Cloud Microservices Interconnect

Microservices interconnect through communication. Every microservice lies in a different location and is deployed independently. DevOps teams have a self-supporting continuous delivery pipeline assigned to each microservice. Facilitating communication between microservices is not a simple process.

You need to consider various parameters, like scalability, latency, and throughput. There are numerous ways of classifying communication modes, the most common being synchronous versus asynchronous. Here are different techniques for facilitating communication between microservices.

· The Brokerless Design

This approach involves direct communication between microservices. Programmers can use WebSockets or HTTP/2 for streaming, or plain HTTP for conventional request-response. Apart from load balancers and routers, there are no brokers between two or more microservices. You can connect to any service directly as long as you know its address and the API it exposes.
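As a rough illustration of brokerless request-response, the sketch below runs a tiny HTTP service and calls it directly by address using only the Python standard library. The service, its JSON shape, and the names `PriceHandler`, `start_price_service`, and `fetch_price` are all invented for the example.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """Hypothetical 'price' microservice: GET /<sku> returns a fixed JSON price."""

    def do_GET(self):
        body = json.dumps({"sku": self.path.strip("/"), "price": 9.99}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def start_price_service():
    # Port 0 asks the OS for any free port; the caller reads it from server_address.
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Bypass any proxy configured in the environment: the call goes straight to the service.
_direct = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_price(address: str, sku: str) -> float:
    """Caller microservice: needs only the service address and its API."""
    with _direct.open(f"http://{address}/{sku}", timeout=5) as resp:
        return json.load(resp)["price"]
```

The caller has to know the address up front, which is exactly why service discovery matters in this design.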

Pros of the Brokerless Design

- This design has the least latency, is fast, and gives users the ultimate performance.

- Offers high throughput where more CPU cycles focus on executing tasks instead of routing.

- It’s easy to visualize and implement

- It is easy to debug

Cons

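- A brokerless design is tightly coupled. For example, if several microservices need real-time updates about new payments occurring every minute, the payment service has to know about and connect to each of them.

- Service discovery becomes mandatory, and the discovery mechanism itself must remain scalable and responsive.

- Connections are hard to manage, because the number of point-to-point links grows quickly as services are added.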

Brokerless designs often stop working well as a system grows. In that case, the broker design becomes critical.

· The Broker (Messaging Bus) Design

Every communication in the broker design is routed through a group of brokers. Brokers are server processes running advanced routing algorithms. Each microservice connects to a broker.

The microservice can send and receive messages through the same connection. The service sending messages is referred to as a publisher, while the receiver is a subscriber. Messages are published to a specific topic, and a subscriber receives messages only for the topics it has subscribed to.
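The publisher, subscriber, and topic roles can be illustrated with a very small in-process broker. This is a teaching sketch under the assumption of synchronous, in-memory delivery; the `Broker` class is hypothetical and does not model persistence, retries, or networking.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory message broker with topic-based publish/subscribe."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, callback) -> None:
        # A subscriber registers interest in one topic.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message) -> int:
        # Deliver to every subscriber of this topic; return how many received it.
        receivers = self._subscribers.get(topic, [])
        for callback in receivers:
            callback(message)
        return len(receivers)
```

Note that the publisher never references a subscriber directly. It only knows the topic name, which is what decouples the services from one another.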

Pros

- Load balancing. Many messaging brokers support load balancing, which simplifies the overall architecture and makes it highly scalable. Some brokers, such as RabbitMQ, come with built-in retries that make the communication channel more reliable.

- Fan Out & Fan In. A messaging backend makes it easy to distribute a workload across workers and aggregate the results.

- Service discovery is not needed when utilizing a messaging backend. Each microservice plays the role of a client.
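The fan-out and fan-in benefit can be sketched with two queues standing in for the messaging backend: one distributes tasks to workers and the other collects their results. The function name `fan_out_fan_in` and the use of threads as "workers" are assumptions made for the example.

```python
import queue
import threading


def fan_out_fan_in(items, work, workers=3):
    """Distribute items to worker threads (fan out) and gather results (fan in)."""
    tasks, results = queue.Queue(), queue.Queue()
    for item in items:
        tasks.put(item)

    def worker():
        while True:
            try:
                item = tasks.get_nowait()
            except queue.Empty:
                return  # no tasks left for this worker
            results.put((item, work(item)))

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # All workers have finished, so draining the results queue is safe here.
    collected = {}
    while not results.empty():
        item, value = results.get()
        collected[item] = value
    return collected
```

Which worker handles which item is not deterministic, but the aggregated result is. With a real broker, the two queues would be named queues or topics on the messaging backend and the workers would be separate service instances.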

Cons

- Scaling the brokers for significantly distributed systems can be a daunting task.

- The broker requires storage, memory, and CPU resources to function appropriately.

- The extra hops through a message bus add to overall latency, especially in remote procedure call (RPC)-like use cases.

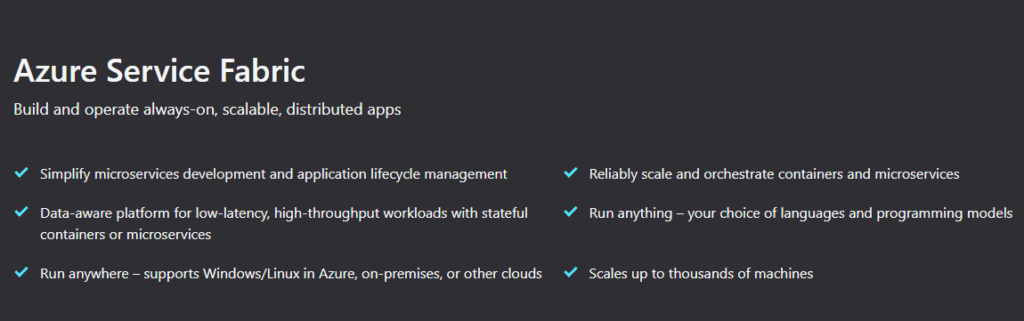

Azure Service Fabric

Azure Service Fabric is a distributed systems platform that allows users to package, deploy, and manage scalable, reliable microservices and containers. Service Fabric also addresses the significant challenges involved in developing and managing cloud applications.

One of the core factors that make Service Fabric unique is its strong focus on developing stateful services. You can leverage the Service Fabric software development model or operate stateful containerized services written in any code or language.

Development teams can build Service Fabric clusters on Windows Server or Linux, on-premises, and on other cloud computing services. Service Fabric powers numerous Microsoft services today, such as Microsoft Power BI, Azure SQL Database, Cortana, Microsoft Intune, and Azure Cosmos DB.

Benefits of Service Fabric

The foundation of modern application development is a scalable and resilient service-based API tier. Hosting, monitoring, and scaling the infrastructure needed to serve large numbers of requests can be complex, costly, and hard to manage.

Service Fabric is a container host and microservices platform that manages this infrastructure problem. It also adds more features like deployment, provisioning, monitoring, and scaling of the available APIs.

Service Fabric is a Microsoft platform that runs on different types of infrastructure. You can deploy it on Azure, on other public cloud services, or on-premises. Some of the benefits of Service Fabric include:

· Monitoring Applications

Service Fabric simplifies the idea of monitoring web applications and services and supporting infrastructure. The initial application monitoring level occurs via the instrumentation of the application code.

Service Fabric introduces a health model that development teams can use within the application or service code. It offers a real-time view of a cluster's health by monitoring the underlying infrastructure and the services it hosts.

This process ensures the delivery team understands possible problem triggers that can cascade into more significant issues leading to outages. Incorporating this model in custom business code instrumentation logic is crucial in ensuring the system remains healthy.

The health model of a Service Fabric cluster is hierarchical, meaning that different thresholds, metrics, and rules can be defined as health policies that set the tolerances for an error, warning, or healthy state.
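To illustrate what a hierarchical health model means, the sketch below rolls health up a tree by taking the worst state found among an entity and its children. It is a deliberate simplification and not the Service Fabric API: the names `Health` and `Entity` are hypothetical, and real health policies can tolerate a configurable share of unhealthy children rather than always taking the worst case.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Health(IntEnum):
    OK = 0
    WARNING = 1
    ERROR = 2


@dataclass
class Entity:
    """Hypothetical node in a health hierarchy (cluster, application, service, ...)."""
    name: str
    own: Health = Health.OK
    children: list = field(default_factory=list)

    def aggregated(self) -> Health:
        # Worst-of roll-up: a parent is only as healthy as its least healthy child.
        return max([self.own] + [child.aggregated() for child in self.children])
```

In this scheme a single service in a warning state surfaces as a warning on its application and on the cluster, which is how a problem deep in the hierarchy becomes visible at the top before it cascades.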

· API Management

Service Fabric integrates with Azure API Management, allowing organizations to import service definitions. They can also define sophisticated routing rules and add access control, rate limiting, monitoring, event logging, and response caching without changing application code.

· Environment Parity and Deployment Options

Today, the most popular way of deploying Service Fabric-based applications and services is to use the Service Fabric infrastructure available through Microsoft Azure. Using Azure infrastructure is not required, however. Remember, Service Fabric is a platform and not an Azure-only service.

As a result, development teams can deploy it on any infrastructure, including development machines. This is a core benefit: Service Fabric supports a flexible deployment process, and organizations that have already invested in on-premises infrastructure can keep using it together with Service Fabric.

Amazon Web Services (AWS) RabbitMQ

Amazon MQ is a managed message broker service for RabbitMQ and Apache ActiveMQ that simplifies setting up and operating message brokers on AWS. It also reduces operational responsibilities by handling the provisioning, setup, and maintenance of the brokers. Because Amazon MQ connects to your existing applications using industry-standard APIs and protocols, development teams can move to AWS quickly without rewriting code.

Benefits of RabbitMQ

RabbitMQ is a message broker that provides a common platform for your applications to send and receive messages. It also gives messages a safe place to stay until they are received. The advantages of RabbitMQ include:

- It covers a wide range of use cases

- It’s easy to integrate

- Queues retain messages until they are fully processed

- Order and delivery guarantee. Within a queue, messages are delivered to consumers in the order in which they were published.

- Third-party systems can consume and interact with the messages.

- Unlike the Java Message Service (JMS), which supports two messaging models, RabbitMQ is an open-source message broker built on the AMQP protocol, which supports four exchange types (direct, fanout, topic, and headers).
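AMQP 0-9-1, the protocol RabbitMQ implements, defines four exchange types: direct, fanout, topic, and headers. The sketch below imitates how each type decides which queues receive a message. It is a pure-Python illustration and does not use a RabbitMQ client library; the function names are hypothetical, and the headers case is simplified to match only when all bound headers are equal.

```python
def topic_matches(pattern: str, key: str) -> bool:
    """AMQP-style topic matching: '*' is exactly one word, '#' is zero or more words."""
    def match(p, k):
        if not p:
            return not k
        if p[0] == "#":
            # '#' either matches nothing, or consumes one word and tries again.
            return match(p[1:], k) or (bool(k) and match(p, k[1:]))
        if not k:
            return False
        return p[0] in ("*", k[0]) and match(p[1:], k[1:])
    return match(pattern.split("."), key.split("."))


def route(exchange_type, bindings, routing_key="", headers=None):
    """Return the queues that receive a message.

    bindings is a list of (queue_name, binding), where binding is a routing key,
    a topic pattern, or a dict of headers depending on the exchange type.
    """
    headers = headers or {}
    if exchange_type == "fanout":
        return [q for q, _ in bindings]  # every bound queue, key ignored
    if exchange_type == "direct":
        return [q for q, b in bindings if b == routing_key]
    if exchange_type == "topic":
        return [q for q, b in bindings if topic_matches(b, routing_key)]
    if exchange_type == "headers":
        # Simplified: every bound header must match the message headers.
        return [q for q, b in bindings
                if all(headers.get(name) == value for name, value in b.items())]
    raise ValueError(f"unknown exchange type: {exchange_type}")
```

For instance, a topic binding of `orders.*` receives `orders.created` but not `orders.created.eu`, while `orders.#` receives both.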

Key Differences between Azure and AWS

- AWS EC2 users can configure their own virtual machines or start from preconfigured images. Azure users, on the other hand, select a virtual hard disk to create a virtual machine that a third party has preconfigured. They must also specify the memory and number of cores needed.

- AWS provides a Virtual private cloud allowing users to develop isolated networks in the cloud. Azure delivers a Virtual network enabling development teams to establish isolated subnets, networks, private IP addresses, and route tables.

- Both AWS and Azure use a pay-as-you-go model. The comparison traditionally drawn is that AWS charged per hour while Azure charged per minute, which made Azure's pricing more precise. Both providers have since moved to finer-grained billing for many services.

- Azure supports Hybrid cloud systems while AWS supports third-party or private cloud providers.

- AWS provides temporary storage, which is allocated when an instance is started and destroyed once the instance is terminated. Azure provides storage via block blobs for object storage and page blobs for virtual machine disks.

- AWS comes with more configurations and features. It also offers more power, flexibility, and customization and supports integration with numerous third-party tools. Azure is easy to use for people who are familiar with Windows because it is a Microsoft platform. Integrating on-premises Windows servers with cloud instances is easy.

Is Azure Better than AWS?

Azure provides beneficial features like PaaS and hybrid solutions, which are crucial for today’s cloud strategies. Many enterprises have experienced business growth after adopting Azure, which for them makes it a better choice than AWS. Azure has evolved and continues to add capabilities and features. Here are some examples to explain why Azure can be the stronger option.

· PaaS Capability

Both AWS and Azure provide similar IaaS features for networking, virtual machines, and storage. However, Azure has robust PaaS capabilities, which are critical for cloud infrastructure today.

Microsoft Azure PaaS provides the building blocks, tools, and environment that front-end application developers need to develop and automate cloud services quickly. It also offers the ideal DevOps connections critical for fine-tuning, managing, and monitoring the applications. In Azure PaaS, Microsoft executes most infrastructure management behind the scenes.

· Hybrid Solutions for Smooth Cloud Connectivity

Azure has invested heavily in hybrid solutions, an area that Amazon was slower to adopt. Azure links data centers to the cloud smoothly and offers a dependable platform for moving workloads between on-premises environments and the public cloud.

· Security Offerings

Azure is designed according to the Security Development Lifecycle (SDL), an industry-leading assurance process. Security is built in at its core, so private data and services remain protected and secured while they are on the Azure cloud.

· Integrated Environment

Azure comes with an integrated environment to facilitate the development, testing, and deployment of cloud applications. Clients are free to choose their own frameworks, and support for open development languages makes migrating to Azure more flexible. Ready-made services for mobile, web, and media, integrated with templates and APIs, can be used to kick-start Azure application development.

· .Net Compatibility

Azure is compatible with the .NET framework, which gives Microsoft an edge over AWS and other competitors in the market. Azure is developed and optimized to work consistently with both old and new applications built with the .NET framework. Enterprises can move their Windows applications to the Azure cloud more easily than to AWS.

Finally

Are you looking for the best way to master microservices? You can access numerous courses or attend webinar training online. Extensive knowledge of microservices makes you more marketable to employers. If you are an employer, hire a SaaS developer who is also conversant with other advanced technologies, like machine learning, to boost your company’s performance.